
September 16, 2025

Test Driven Development (TDD): A Practical, Modern Guide to Writing Better Software Faster

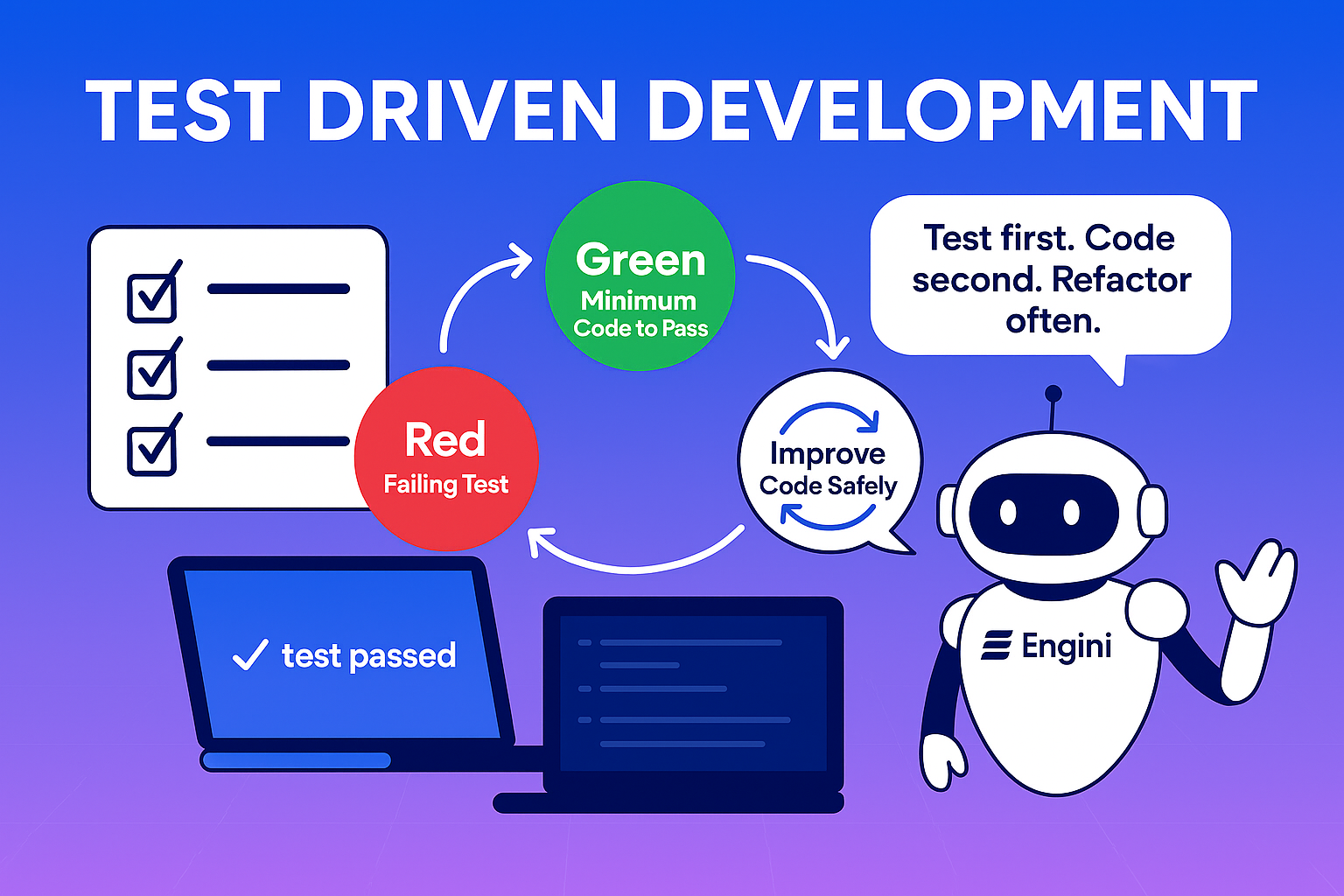

“Write a failing test first.” It sounds backward until you try it. Test Driven Development (TDD) flips the usual order of coding on its head: you specify behavior, prove it’s missing with a failing test, then implement the minimum code to make that test pass. The payoff is a tighter feedback loop, better design, and a living safety net that accelerates future change.

This article is a guide for engineering leaders and senior developers who already value automated testing but want to apply TDD methodically on modern stacks. You’ll learn how TDD works in practice, where it shines (and where it doesn’t), how to adopt it across a team, and which tools make it stick. We’ll also unpack the nuances (mocks vs. fakes, test smells, coverage myths) and offer a simple rollout roadmap you can start using this sprint.

What Is Test Driven Development?

Test-Driven Development (TDD) is a software practice where you first write a small, failing automated test for the next behavior, then the minimum production code to pass it, and finally refactor. This red-green-refactor loop drives incremental design, fast feedback, and a reliable regression safety net.

- Write a failing test

- See it fail (red)

- Write the smallest code to pass (green)

- Run the full suite and confirm every test is green

- Refactor, then repeat
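To make the loop concrete, here is a minimal Python sketch of one cycle. The apply_discount function and its integer-cents convention are hypothetical, chosen only for illustration; in a real project the test would usually live in its own test_*.py module and be run with pytest.

```python
# Step 1 (red): write the test first. If you run it before apply_discount
# exists, pytest reports a NameError: that failure is the "red" you want to see.
def test_ten_percent_off_100_dollars():
    assert apply_discount(10_000, percent=10) == 9_000  # amounts in cents


# Step 2 (green): the smallest implementation that makes the test pass.
# Integer cents avoid floating-point rounding surprises with money.
def apply_discount(price_cents: int, percent: int) -> int:
    return price_cents * (100 - percent) // 100


# Step 3: rerun the whole suite (here just one test), confirm it is green,
# and only then refactor names or structure.
```

Saved as test_discount.py, running pytest in that directory would collect and execute the test.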

Why Test Driven Development Matters

Teams adopting TDD often report several tangible benefits that go beyond “fewer bugs”:

Design clarity. Writing tests first forces you to think about interfaces, inputs/outputs, and failure modes before diving into implementation details. This often leads to simpler, more cohesive APIs and fewer hidden dependencies.

Faster feedback loops. Because you write only enough code to satisfy one failing test and rerun the tests at every step, you catch regressions or design mistakes within minutes of introducing them. Short cycles beat long debugging sessions.

Change with confidence. As the test suite grows, it forms a powerful regression net. When you refactor or introduce new features, tests highlight unintended side effects early, which is especially important in complex, integrated systems.

Reduced rework. TDD nudges you toward loosely coupled modules. Loose coupling keeps refactors small and localized, which reduces the cost of change as the codebase grows.

Onboarding and shared understanding. New team members learn expected behaviors by reading tests. This accelerates onboarding and reduces reliance on tribal knowledge.

The Red–Green–Refactor Cycle in Real Life

A healthy TDD cycle is tiny, often just a few minutes:

- Red: Write the smallest failing test that expresses a specific behavior. Keep scope narrow.

- Green: Implement the minimum code to pass the test. Resist adding generalizations “you might need later.”

- Refactor: Improve names, remove duplication, tease apart responsibilities, and clarify intent. The passing tests confirm you haven’t broken the behavior they cover.

Repeat. The discipline is in keeping cycles short. If you’re stuck in red for more than ten minutes, your slice is probably too big; split it. If green requires a sprawling implementation, you’re likely coding beyond the test’s promise.

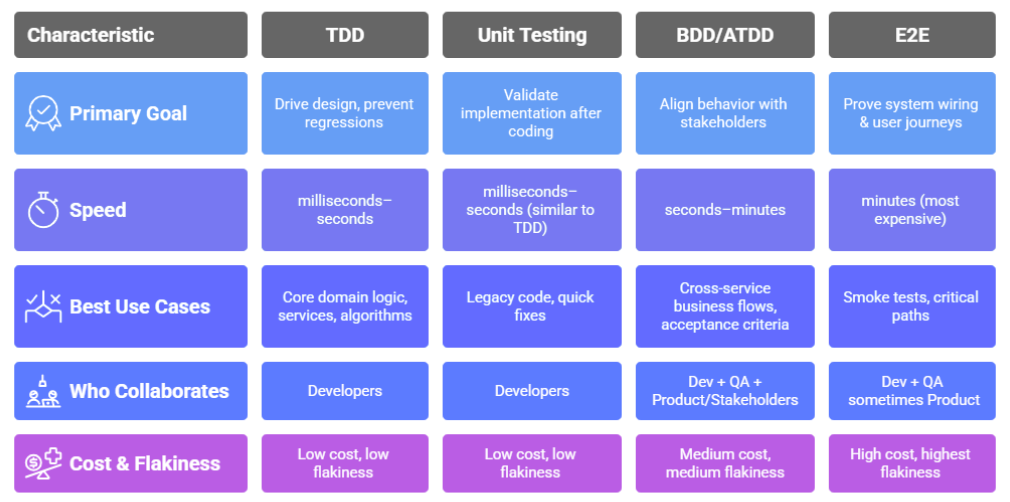

Test Driven Development vs. Unit Testing vs. BDD vs. “Test-Last”

It’s easy to conflate these practices, so let’s distinguish them:

Unit testing is about scope: testing the smallest meaningful pieces of functionality. You can do unit testing with or without TDD.

Test-Last means you write tests after the implementation. This can still be valuable, but it loses the design benefits and early feedback TDD provides.

Behavior-Driven Development (BDD) emphasizes describing behavior in a human-friendly, often domain-specific language. Many teams successfully combine TDD at the unit level with BDD-style specs at the service or acceptance level. TDD defines the micro-behaviors; BDD validates end-to-end business flows.

Takeaway: TDD is a workflow (write tests first), unit testing is a scope, and BDD is a collaboration and specification style. They complement each other.

When TDD Shines and When It Doesn’t

Best-fit scenarios. TDD thrives when you’re building core domain logic, algorithms, libraries, or services with well-defined boundaries. It’s especially effective in codebases where change is frequent and correctness matters.

Trickier areas. UI-heavy code, asynchronous event handling, and legacy systems without seams are harder. They’re not impossible, just more expensive. In these cases, favor presenter/view-model patterns, hexagonal architecture, and dependency injection to move logic into testable units. For legacy code, start by introducing characterization tests to lock down existing behavior before refactoring.

Not a religion. It’s OK to spike a solution without tests to explore feasibility (call it a “learning spike”). The key is to extract the learning into testable units afterwards.

Foundational Concepts: Collaborators, Test Doubles, and Boundaries

To keep unit tests fast and deterministic, isolate the unit under test from slow or flaky dependencies:

Test doubles come in flavors:

- Mocks verify interactions (e.g., a method is called with certain arguments).

- Stubs return canned responses without behavior verification.

- Fakes are lightweight working implementations (like an in-memory repository).

Use fakes to support richer scenarios without external systems. Save mocks for edges where you truly need interaction verification (e.g., “did we publish the event?”). Overusing mocks leads to brittle tests that mirror implementation details and break on refactor.
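Here is a minimal sketch of all three kinds of double in one Python test, using the standard library's unittest.mock.Mock. OrderService, InMemoryOrderRepository, and the tax-rate and publisher collaborators are hypothetical names for illustration. The fake and the stub support a state-based assertion, while the mock is kept for the one interaction that matters: publishing the event.

```python
from dataclasses import dataclass
from typing import Dict, Optional
from unittest.mock import Mock


@dataclass(frozen=True)
class Order:
    order_id: str
    total_cents: int


class InMemoryOrderRepository:
    """Fake: a small but genuinely working implementation backed by a dict."""

    def __init__(self) -> None:
        self._orders: Dict[str, Order] = {}

    def save(self, order: Order) -> None:
        self._orders[order.order_id] = order

    def get(self, order_id: str) -> Optional[Order]:
        return self._orders.get(order_id)


class OrderService:
    """Hypothetical unit under test with three collaborators."""

    def __init__(self, repository, tax_rates, publisher) -> None:
        self._repository = repository
        self._tax_rates = tax_rates
        self._publisher = publisher

    def place(self, order_id: str, subtotal_cents: int) -> Order:
        rate = self._tax_rates.percent_for("default")
        order = Order(order_id, subtotal_cents + subtotal_cents * rate // 100)
        self._repository.save(order)
        self._publisher.publish("order_placed", order_id)
        return order


def test_placing_an_order_stores_total_and_publishes_event():
    repository = InMemoryOrderRepository()       # fake
    tax_rates = Mock()                            # stub: canned answer, never asserted on
    tax_rates.percent_for.return_value = 20
    publisher = Mock()                            # mock: its interaction is verified

    OrderService(repository, tax_rates, publisher).place("A-1", 1_000)

    # State-based assertion against the fake.
    assert repository.get("A-1") == Order("A-1", 1_200)
    # Interaction assertion, reserved for the edge that really matters.
    publisher.publish.assert_called_once_with("order_placed", "A-1")
```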

Boundaries are where your code meets databases, queues, HTTP services, file systems, clocks, or random number generators. Wrap these in adapter interfaces so your core logic depends on abstractions you control. In tests, swap the real adapter for a fake or stub to keep tests fast and hermetic.
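For example, wrapping the system clock behind a small port makes time-dependent rules straightforward to test. This is a sketch assuming Python 3.8+ (for typing.Protocol); Clock, SystemClock, FixedClock, is_trial_expired, and the 14-day trial are all hypothetical.

```python
import datetime as dt
from typing import Protocol


class Clock(Protocol):
    """Port: the only way core logic asks for the current time."""

    def now(self) -> dt.datetime: ...


class SystemClock:
    """Production adapter backed by the real system clock."""

    def now(self) -> dt.datetime:
        return dt.datetime.now(dt.timezone.utc)


class FixedClock:
    """Test adapter: always returns the instant it was constructed with."""

    def __init__(self, instant: dt.datetime) -> None:
        self._instant = instant

    def now(self) -> dt.datetime:
        return self._instant


def is_trial_expired(started: dt.datetime, clock: Clock, trial_days: int = 14) -> bool:
    """Core rule: depends on the Clock port, never on datetime.now() directly."""
    return clock.now() >= started + dt.timedelta(days=trial_days)


def test_trial_expires_exactly_after_fourteen_days():
    start = dt.datetime(2025, 1, 1, tzinfo=dt.timezone.utc)
    assert not is_trial_expired(start, FixedClock(start + dt.timedelta(days=13)))
    assert is_trial_expired(start, FixedClock(start + dt.timedelta(days=14)))
```

The test is deterministic because FixedClock never moves; SystemClock is only wired in at the application's entry point.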

How TDD Fits Modern Stacks

TDD is language-agnostic. The patterns repeat across ecosystems:

Java / Kotlin. JUnit 5 (Jupiter), AssertJ, Mockito, Testcontainers for integration tests, and Spring Boot test slices for layered testing. Structure domains using ports-and-adapters to isolate logic.

JavaScript / TypeScript. Vitest, or Jest with ts-jest, for unit tests; Testing Library for UI component tests; Playwright for E2E. Favor composition over deep class hierarchies; rely on dependency injection via constructors or factory functions.

Python. pytest with fixtures and parametrization, unittest.mock or pytest-mock for doubles. Extract side effects into gateway classes (e.g., EmailService, PaymentsGateway) so core functions remain pure and easy to test.
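A sketch of that Python advice, under assumptions: the EmailService name comes from the text above, but its send method, the Invoice and Email types, and the overdue-reminder rule are hypothetical. The pure function decides what to send; the thin shell hands each message to the gateway, which tests replace with a recording fake.

```python
import datetime as dt
from dataclasses import dataclass
from typing import List, Protocol


@dataclass(frozen=True)
class Invoice:
    customer_email: str
    due: dt.date
    paid: bool


@dataclass(frozen=True)
class Email:
    to: str
    subject: str


class EmailService(Protocol):
    """Gateway port: the only place where email I/O happens."""

    def send(self, email: Email) -> None: ...


def overdue_reminders(invoices: List[Invoice], today: dt.date) -> List[Email]:
    """Pure core: decides what to send and performs no I/O."""
    return [
        Email(inv.customer_email, f"Invoice overdue since {inv.due.isoformat()}")
        for inv in invoices
        if not inv.paid and inv.due < today
    ]


def send_overdue_reminders(
    invoices: List[Invoice], today: dt.date, email_service: EmailService
) -> int:
    """Thin shell: computes the reminders, then delegates sending to the gateway."""
    emails = overdue_reminders(invoices, today)
    for email in emails:
        email_service.send(email)
    return len(emails)


class RecordingEmailService:
    """Fake gateway for tests: records messages instead of sending them."""

    def __init__(self) -> None:
        self.sent: List[Email] = []

    def send(self, email: Email) -> None:
        self.sent.append(email)


def test_only_unpaid_past_due_invoices_get_reminders():
    today = dt.date(2025, 9, 16)
    invoices = [
        Invoice("a@example.com", dt.date(2025, 9, 1), paid=False),
        Invoice("b@example.com", dt.date(2025, 9, 1), paid=True),
        Invoice("c@example.com", dt.date(2025, 9, 30), paid=False),
    ]
    assert overdue_reminders(invoices, today) == [
        Email("a@example.com", "Invoice overdue since 2025-09-01")
    ]
```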

.NET (C#/F#). xUnit/NUnit, FluentAssertions, NSubstitute/Moq. Leverage records/immutable types for clarity and easier reasoning under test.

Across all stacks, keep your unit tests in the same repo as the code, run them locally in seconds, and wire them into your CI pipeline so every commit exercises the suite.

Measuring TDD’s ROI Without Gaming the Metrics

Beware vanity metrics. Line coverage is an indicator, not a goal. Teams chasing 100% coverage often write trivial tests that add maintenance cost without catching meaningful bugs.

Instead, track outcomes aligned to business value and flow efficiency:

- Change failure rate: Do releases roll back less often after adopting TDD?

- Lead time for changes: Do features move from merge to production faster due to fewer rework cycles?

- Mean time to recovery: Do regressions surface sooner and get fixed quicker thanks to clear tests?

- Escaped defects: Are customer-impacting bugs decreasing over time?

Pair these with qualitative signals: new hire ramp-up speed, refactor confidence, and the ease of reasoning about code. TDD’s impact shows up as smoother flow, not just bigger coverage numbers.

Adopting Test Driven Development: Rollout Plan

Rolling TDD out team-wide works best in deliberate stages:

Start with a pilot. Pick a product area with active development, engaged maintainers, and reasonable boundaries, ideally not the gnarliest legacy subsystem. Define explicit success criteria (e.g., reduce escaped defects in that area by 30% across two releases, cut PR rework cycles by 20%).

Set a cadence. Run a short TDD dojo (90 minutes weekly for 4–6 weeks) pairing senior and mid-level developers on a focused kata. The goal is muscle memory: small red tests, minimal green code, mindful refactor.

Introduce seams in legacy code. For older modules, add characterization tests first. Identify a chokepoint (e.g., a repository or service interface), introduce an abstraction, and begin testing the core logic via a fake adapter. Refactor iteratively.
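A characterization test pins down what the code does today, not what a spec says it should do. In this hypothetical sketch, legacy_shipping_cost stands in for an untested legacy function; the expected values were obtained by running the current code, quirks included (for instance, the express multiplier is applied before the heavy-parcel fee).

```python
def legacy_shipping_cost(weight_kg, express):
    # Hypothetical stand-in for untested legacy code: read it, don't change it yet.
    if weight_kg <= 0:
        return 0
    cost = 5 + weight_kg * 2
    if express:
        cost = cost * 1.5
    if weight_kg > 20:
        cost = cost + 10
    return round(cost, 2)


# Expected values captured by running the current code, not taken from a spec.
RECORDED_BEHAVIOR = {
    (1, False): 7,
    (1, True): 10.5,
    (21, False): 57,
    (21, True): 80.5,   # express multiplier applied before the +10 heavy fee
    (0, True): 0,       # surprising, but pinned: change it deliberately later
}


def test_legacy_shipping_cost_is_unchanged():
    for (weight_kg, express), expected in RECORDED_BEHAVIOR.items():
        actual = legacy_shipping_cost(weight_kg, express)
        assert actual == expected, f"{weight_kg=}, {express=}: {actual} != {expected}"
```

Once this net is in place, extracting an abstraction around the function can proceed; any refactor that changes a recorded value fails loudly.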

Update team working agreements. Agree on boundaries: “No new code enters core/ without a failing test,” “Unit test runtime under 2 minutes,” and “Business logic depends only on our ports, never on concrete adapters.”

Build the happy path, then branch. Write tests for the main flow, then add tests for edge cases and error handling. Avoid pursuing every hypothetical; focus on credible risks and real behavior.

Pair and mob strategically. Pairing during the pilot accelerates adoption and aligns on style. Use mob sessions for knotty refactors where collective thinking helps.

Tooling and CI/CD Integration

TDD sticks when the path of least resistance is to run tests constantly. Invest in:

- Fast local runs. Use watch modes (e.g., vitest --watch, or pytest -f from the pytest-xdist plugin) and pre-configured IDE run configs.

- Representative test data. Create factories/builders for domain objects so tests are expressive and intent-revealing.

- Layered test suite. Fast, isolated unit tests at the base; a thin layer of integration tests for boundary contracts; and a few critical end-to-end flows to prove wiring.

- CI gates that help, not hinder. Fail fast on unit tests, run integration/E2E in parallel, publish test reports, and surface flaky tests automatically.

- Infrastructure for collaboration. Test coverage diff on PRs, pre-commit hooks, and convention-enforcing linters (naming, file layout, fixture placement).

Keep your feedback loop sacred. If the unit test suite ever creeps beyond a couple of minutes, profile and prune: parallelize, mock slow boundaries, or split integration concerns out of the unit layer.

Common Anti-Patterns

Mock explosion. If every test asserts five interactions with three mocks, you’re testing implementation details instead of behavior.

Fix: promote fakes and validate outputs over call counts.

Giant test fixtures. When setup spans dozens of lines, the design probably packs too much responsibility into one place.

Fix: refactor toward smaller collaborators and use builders to create concise, intention-revealing test data.
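One common way to build intention-revealing test data in Python is a builder function with valid defaults that each test overrides selectively, here using the standard library's dataclasses.replace. Subscription, a_subscription, and the 15% annual discount rule are hypothetical.

```python
import datetime as dt
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Subscription:
    plan: str
    seats: int
    annual: bool
    started: dt.date
    country: str


def a_subscription(**overrides) -> Subscription:
    """Test-data builder: valid defaults, overridden only where a test cares."""
    default = Subscription(
        plan="pro", seats=5, annual=False, started=dt.date(2025, 1, 1), country="US"
    )
    return replace(default, **overrides)


def annual_discount_percent(subscription: Subscription) -> int:
    """Hypothetical rule under test."""
    return 15 if subscription.annual else 0


def test_annual_plans_get_fifteen_percent():
    # The only field mentioned is the one the behavior depends on.
    assert annual_discount_percent(a_subscription(annual=True)) == 15


def test_monthly_plans_get_no_discount():
    assert annual_discount_percent(a_subscription()) == 0
```

Because each test names only the fields it depends on, a reader can see at a glance which input drives the expected outcome.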

Over-generalizing in green. Writing abstractions “just in case” slows you down.

Fix: code only to satisfy today’s failing test; refactor toward generality when a second test demands it.
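Kent Beck calls this technique triangulation. In this hypothetical Python sketch, the first test alone could be passed by returning the constant 3; only the second test justifies the general implementation.

```python
# Test 1: on its own, `return 3` would be a legitimate smallest green step.
def test_counts_vowels_in_hello_world():
    assert count_vowels("hello world") == 3


# Test 2: breaks the hard-coded answer, so generalizing is now justified.
def test_counts_uppercase_vowels():
    assert count_vowels("AEIOU") == 5


def count_vowels(text: str) -> int:
    # Generalized only after the second test demanded it.
    return sum(1 for ch in text.lower() if ch in "aeiou")
```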

UI tests for logic. Exercising business rules through the UI is slow and flaky.

Fix: isolate logic in pure modules and test it at the unit level; keep UI tests thin.

Coverage worship. Chasing 100% encourages trivial tests.

Fix: track outcome metrics and be comfortable with lower coverage where code is trivial or purely data.

Designing for Testability: Hexagonal Architecture in a Nutshell

Hexagonal (Ports and Adapters) Architecture cleanly separates core logic (domain) from infrastructure (HTTP, DB, queues). The core depends on ports (interfaces) that define what it needs; adapters implement those ports for real systems.

With TDD, you implement core behaviors against fake adapters first (fast), then write integration tests to prove real adapters conform to port contracts. This gives you the speed of unit tests without losing real-world assurance.
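A minimal sketch of that workflow in Python, with hypothetical names throughout: a CustomerRepository port, an in-memory fake adapter, and a reusable contract check. The same check_customer_repository_contract function could be pointed at a real database-backed adapter in an integration test, which is how the fake and the real adapter are kept consistent with each other.

```python
from typing import Dict, Optional, Protocol


class CustomerRepository(Protocol):
    """Port: what the core needs, defined in the core's own terms."""

    def add(self, customer_id: str, email: str) -> None: ...

    def email_for(self, customer_id: str) -> Optional[str]: ...


class InMemoryCustomerRepository:
    """Fake adapter used by fast unit tests."""

    def __init__(self) -> None:
        self._emails: Dict[str, str] = {}

    def add(self, customer_id: str, email: str) -> None:
        self._emails[customer_id] = email

    def email_for(self, customer_id: str) -> Optional[str]:
        return self._emails.get(customer_id)


def check_customer_repository_contract(repo: CustomerRepository) -> None:
    """Behavior every adapter must honor, whether fake or real."""
    assert repo.email_for("missing") is None
    repo.add("c-1", "ada@example.com")
    assert repo.email_for("c-1") == "ada@example.com"


def welcome_subject(repo: CustomerRepository, customer_id: str) -> str:
    """Core logic: depends only on the port, never on a concrete adapter."""
    email = repo.email_for(customer_id)
    if email is None:
        raise KeyError(customer_id)
    return f"Welcome, {email}!"


def test_in_memory_adapter_honors_contract():
    check_customer_repository_contract(InMemoryCustomerRepository())


def test_welcome_subject_uses_stored_email():
    repo = InMemoryCustomerRepository()
    repo.add("c-1", "ada@example.com")
    assert welcome_subject(repo, "c-1") == "Welcome, ada@example.com!"
```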

Refactoring with Confidence

TDD’s quiet superpower is how it enables refactoring. The refactor step is not optional; it’s the heart of sustainable code. Each green test is an invitation to improve names, split functions, invert dependencies, or extract modules. Over time, the codebase stays supple rather than calcifying into patterns chosen hastily months ago.

A useful habit: after every two or three green cycles, spend a minute hunting duplication, either duplicated logic or duplicated knowledge (the same business rule expressed in multiple places). Consolidate it in one well-named function or object. Your future self will thank you.

Testing Method Comparison

Use TDD and unit tests for fast feedback; reserve BDD/ATDD for shared behavior and E2E tests for critical paths.

A Day-in-the-Life Example

Imagine you’re adding tiered pricing to a subscription service. Start by writing a failing test for calculating the monthly charge given a plan, usage, and discounts. Keep I/O out of it-no databases, no HTTP calls-just pure calculation:

- Red: A test asserts that “Pro plan with 12,000 events and a 10% discount totals $X.” It fails-no calculator exists yet.

- Green: Create PricingCalculator.total(plan, usage, discount) and implement the smallest logic to pass the test.

- Refactor: Improve naming, extract constants, and clarify tier boundaries.

Next, add edge cases: free tier thresholds, prorations, and overage rounding. As these pass, you refactor duplication and isolate tricky bits (e.g., rounding) behind well-named helpers. Only when the pricing logic is solid and well-tested do you wire in adapters: fetching plans from the DB, reading usage metrics from a service, and emitting invoices. Integration tests verify those adapters adhere to contracts; a small E2E confirms the checkout flow still works.
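Here is one way the pricing module might look after several red-green-refactor cycles. Only the PricingCalculator.total(plan, usage, discount) signature comes from the text; the plan prices, the 1,000-event overage blocks, and round-half-up to cents are assumptions made up for illustration and are not meant to reproduce the $X in the scenario above. Decimal keeps the money arithmetic exact.

```python
from dataclasses import dataclass
from decimal import ROUND_HALF_UP, Decimal

EVENTS_PER_BLOCK = 1_000        # assumption: overage billed per started block
CENT = Decimal("0.01")


@dataclass(frozen=True)
class Plan:
    name: str
    base_price: Decimal          # monthly fee
    included_events: int         # events covered by the base fee
    price_per_block: Decimal     # charge per started block of extra events


def overage_blocks(usage: int, included: int) -> int:
    """Extra events rounded up to whole blocks (ceiling division)."""
    extra = max(0, usage - included)
    return -(-extra // EVENTS_PER_BLOCK)


def round_to_cents(amount: Decimal) -> Decimal:
    """The tricky rounding rule, isolated behind one well-named helper."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


class PricingCalculator:
    @staticmethod
    def total(plan: Plan, usage: int, discount: Decimal) -> Decimal:
        """Monthly charge; discount is a fraction, e.g. Decimal("0.10")."""
        blocks = overage_blocks(usage, plan.included_events)
        subtotal = plan.base_price + blocks * plan.price_per_block
        return round_to_cents(subtotal * (1 - discount))


PRO = Plan("pro", Decimal("49.00"), 10_000, Decimal("5.00"))  # hypothetical plan


def test_pro_plan_with_overage_and_discount():
    # 2,000 extra events -> 2 blocks -> 59.00, minus 10% -> 53.10
    assert PricingCalculator.total(PRO, 12_000, Decimal("0.10")) == Decimal("53.10")


def test_partial_block_is_billed_in_full():
    # 2,001 extra events -> 3 blocks -> 64.00, minus 10% -> 57.60
    assert PricingCalculator.total(PRO, 12_001, Decimal("0.10")) == Decimal("57.60")


def test_usage_within_allowance_pays_base_price_only():
    assert PricingCalculator.total(PRO, 9_000, Decimal("0")) == Decimal("49.00")
```

Note that nothing here touches a database or HTTP client; plans and usage arrive as plain values, which is what lets the adapters be added later without rewriting these tests.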

You end with:

- A crisp, test-driven pricing module.

- A few integration tests for adapters.

- One or two E2E tests for the happy path.

This layered approach keeps feedback fast and failures localized.

How Much TDD Is “Enough”?

You don’t need TDD for everything. For glue code or trivial DTOs, test-last or even no tests may be fine. The heuristic: if a bug here would be expensive or embarrassing, or if you expect change, TDD it. If not, keep it lightweight. The goal is sustainable delivery, not dogma.

Governance and Team Agreements That Encourage TDD

TDD flourishes in teams with shared norms:

Definition of Done (DoD): Every new behavior has a corresponding passing test; tests are readable, isolated, and descriptive.

Code review standards: Reviewers assess tests first-do they express behavior clearly? Can they fail for the right reasons? Are they resistant to refactors?

Naming conventions: Tests read like prose, for example should_apply_discount_for_annual_plan. Helper functions and factories use domain language, not technical jargon.

Refactoring budget: Teams explicitly reserve time for refactor steps within sprints. Refactoring is not “extra work”; it is the work.

Security and Reliability Considerations

TDD helps reliability by making failure modes explicit. Consider writing tests for security-relevant behaviors: authentication flows, authorization checks for sensitive operations, and safe handling of malformed input. For distributed systems, test retry/backoff logic in isolation with fakes. You won’t replace formal security testing, but you will catch whole classes of logic errors before they escape.
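For instance, retry/backoff logic can be tested without real network calls or real waiting by injecting both the operation and the sleep function. In this hypothetical sketch, retry_with_backoff retries on ConnectionError with delays that double from 0.5 seconds; a fake operation fails a set number of times, and list.append stands in for time.sleep so the test can assert the exact delays.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def retry_with_backoff(
    operation: Callable[[], T],
    sleep: Callable[[float], None],
    attempts: int = 4,
    base_delay: float = 0.5,
) -> T:
    """Call operation, retrying on ConnectionError with exponential backoff."""
    if attempts < 1:
        raise ValueError("attempts must be >= 1")
    for attempt in range(attempts - 1):
        try:
            return operation()
        except ConnectionError:
            sleep(base_delay * 2 ** attempt)
    return operation()  # final attempt: any error propagates to the caller


class FlakyOperation:
    """Fake that fails a fixed number of times, then succeeds."""

    def __init__(self, failures: int) -> None:
        self.failures = failures
        self.calls = 0

    def __call__(self) -> str:
        self.calls += 1
        if self.calls <= self.failures:
            raise ConnectionError("simulated outage")
        return "ok"


def test_backs_off_exponentially_then_succeeds():
    delays: List[float] = []
    result = retry_with_backoff(FlakyOperation(failures=2), sleep=delays.append)
    assert result == "ok"
    assert delays == [0.5, 1.0]


def test_gives_up_after_last_attempt():
    delays: List[float] = []
    operation = FlakyOperation(failures=10)
    try:
        retry_with_backoff(operation, sleep=delays.append, attempts=3)
    except ConnectionError:
        pass
    else:
        raise AssertionError("expected ConnectionError")
    assert operation.calls == 3
    assert delays == [0.5, 1.0]
```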

Conclusion

Test Driven Development is a practical, repeatable way to build better software with fewer surprises. By writing tests first, you shape APIs thoughtfully, protect yourself against regressions, and move faster with confidence. Start small: pick one module, run disciplined red-green-refactor cycles, and wire tests into your CI so they run constantly. As you expand to legacy areas with characterization tests and ports-and-adapters seams, you’ll feel the compounding benefits-cleaner designs, quicker changes, happier on-calls.

FAQ

Is TDD only for unit tests?

Mostly. Test-Driven Development targets fast, developer-facing unit tests. You can also drive integration/contract tests at service boundaries using fakes or in-memory adapters, but end-to-end (E2E) tests are rarely written test-first because they’re slower and flakier. Aim for a healthy pyramid: many fast unit tests, a few contract tests, very few E2E tests.

Do we need 100% code coverage for TDD to work?

No. Coverage is a signal, not a goal. Prioritize critical paths and behavioral risks. Pair line coverage with mutation testing or risk-based checks to ensure tests are meaningful instead of chasing a percentage.
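To see why coverage alone can mislead, consider a hand-made mutant of a hypothetical free-shipping rule. Both suites execute every line, but only the one that checks the boundary kills the mutant. Mutation testing tools automate this kind of check by generating many such variants and running your tests against each.

```python
from typing import Callable

Rule = Callable[[int], bool]


def free_shipping(total_cents: int) -> bool:
    """Hypothetical rule: orders of $50.00 or more ship free."""
    return total_cents >= 5_000


def free_shipping_mutant(total_cents: int) -> bool:
    # The kind of change a mutation tool generates: ">=" flipped to ">".
    return total_cents > 5_000


def weak_suite(rule: Rule) -> bool:
    # Full line coverage, but never probes the boundary.
    return rule(10_000) is True and rule(100) is False


def boundary_suite(rule: Rule) -> bool:
    return weak_suite(rule) and rule(5_000) is True


for name, suite in [("weak", weak_suite), ("boundary", boundary_suite)]:
    killed = suite(free_shipping) and not suite(free_shipping_mutant)
    print(f"{name} suite kills the mutant: {killed}")
# weak suite kills the mutant: False
# boundary suite kills the mutant: True
```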

Won’t TDD slow the team down?

There’s a short learning curve, but teams often report shipping with fewer defects, spending less time debugging, and refactoring safely. The red–green–refactor loop trades long bug hunts for continuous feedback, which tends to speed delivery over time.

How do we apply TDD on legacy code?

Start with characterization tests to capture current behavior. Create seams (e.g., ports & adapters, dependency inversion), extract collaborators behind interfaces, then use TDD for new or refactored modules inside those seams.

What’s the difference between TDD and BDD/ATDD?

TDD drives design with small, developer-level tests written first. BDD/ATDD describe behavior in shared domain language (often Gherkin/Cucumber) and operate at the acceptance level. They’re complementary: use BDD/ATDD for shared requirements and TDD for code design.


Itay Guttman

Co-founder & CEO at Engini.io

With 11 years in SaaS, I've built MillionVerifier and SAAS First. Passionate about SaaS, data, and AI. Let's connect if you share the same drive for success!
