
August 28, 2025

Integration Testing Guide: Types, Examples, Best Practices

Integration testing sits at the heart of a reliable, high-quality software delivery process. Positioned after unit testing and before full system testing, it verifies that individual components work together correctly. In this guide, you’ll learn what integration testing is, why it matters in today’s fast-moving development environments, how to implement it effectively, which approaches are most common, which industry trends are shaping its future, and how to strengthen your own testing strategy.

What Is Integration Testing?

Integration testing verifies that independently tested modules, APIs, or services work together through their real interfaces, catching contract mismatches, data-format issues, configuration errors, and third-party integration bugs. It sits between unit testing and system/end-to-end (E2E) testing, proving that components communicate, share data, and produce the expected side effects.
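A minimal sketch of what such a test can look like in Python. The `OrderRepository` and `OrderService` classes are hypothetical, and an in-memory SQLite database stands in for a production database. The point is that the service is exercised through its real dependency rather than a mock, and the test checks the resulting side effect (the stored row), not just the return value.

```python
import sqlite3


class OrderRepository:
    """Hypothetical data-access module backed by a real SQLite connection."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS orders ("
            "id INTEGER PRIMARY KEY, sku TEXT NOT NULL, qty INTEGER NOT NULL)"
        )

    def insert(self, sku: str, qty: int) -> int:
        cur = self.conn.execute("INSERT INTO orders (sku, qty) VALUES (?, ?)", (sku, qty))
        self.conn.commit()
        return cur.lastrowid


class OrderService:
    """Hypothetical business-logic module that depends on the repository."""

    def __init__(self, repo: OrderRepository) -> None:
        self.repo = repo

    def place_order(self, sku: str, qty: int) -> int:
        if qty <= 0:
            raise ValueError("qty must be positive")
        return self.repo.insert(sku.upper(), qty)  # normalizes the SKU before storing


def test_place_order_persists_row() -> None:
    # Real interfaces end to end: service -> repository -> SQLite.
    conn = sqlite3.connect(":memory:")
    try:
        service = OrderService(OrderRepository(conn))
        order_id = service.place_order("abc-1", 2)
        # Assert the side effect in the database, not just the returned ID.
        row = conn.execute("SELECT sku, qty FROM orders WHERE id = ?", (order_id,)).fetchone()
        assert row == ("ABC-1", 2)
    finally:
        conn.close()
```

Saved in a `test_*.py` file, pytest would collect `test_place_order_persists_row` automatically; it also runs as a plain function call.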

Why Integration Testing Matters

As applications grow more complex, especially with microservices, distributed systems, and API-driven architectures, interactions between modules become a common source of bugs. Integration issues might not surface during unit testing and can lead to broken features, data issues, performance slowdowns, or system failures in production. Early integration testing helps you:

- Detect interface defects sooner, such as serialization/deserialization errors, schema mismatches, and auth/permission gaps, before they reach a release.

- Reduce debugging costs: failures appear closer to their source, where their impact is smaller.

- Increase release confidence by confirming that components work together under realistic conditions; realistic environments plus explicit contracts mean fewer repeat bugs.

Common Integration Testing Approaches

Top‑Down Testing

This method starts with high-level modules and gradually integrates lower-level units. Lower-level modules are simulated with stubs: simplified replacements that mimic their behavior.

Pros: Early validation of top-layer logic and faster discovery of design flaws.

Cons: Stubs can be complex to manage, and lower-layer behavior may receive little testing.
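A minimal top-down sketch, assuming a hypothetical high-level `CheckoutController` whose lower-level tax module isn't ready yet. The stub returns predictable values and records how it was called, so the top-layer flow and the calls it makes downward can be validated first.

```python
from typing import Protocol


class TaxCalculator(Protocol):
    """Interface the high-level module expects from the lower layer (hypothetical)."""

    def tax_for(self, subtotal_cents: int, region: str) -> int: ...


class CheckoutController:
    """Hypothetical high-level module under test."""

    def __init__(self, tax: TaxCalculator) -> None:
        self.tax = tax

    def total(self, items: list[int], region: str) -> int:
        subtotal = sum(items)
        return subtotal + self.tax.tax_for(subtotal, region)


class TaxCalculatorStub:
    """Stub standing in for the unfinished lower-level module: canned answers only."""

    def __init__(self, rate_by_region: dict[str, float]) -> None:
        self.rate_by_region = rate_by_region
        self.calls: list[tuple[int, str]] = []

    def tax_for(self, subtotal_cents: int, region: str) -> int:
        self.calls.append((subtotal_cents, region))  # record how the top layer calls down
        return round(subtotal_cents * self.rate_by_region.get(region, 0.0))


def test_checkout_total_with_stubbed_tax() -> None:
    stub = TaxCalculatorStub({"EU": 0.2})
    controller = CheckoutController(stub)
    assert controller.total([1000, 500], "EU") == 1800
    # The top layer passed the expected arguments to the (stubbed) lower layer.
    assert stub.calls == [(1500, "EU")]
```

When the real tax module arrives, the same test can be rerun with it in place of the stub.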

Bottom‑Up Testing

The opposite of top‑down, this approach begins with low-level modules and works upward. Drivers simulate the higher-level modules that would normally call them.

Pros: Core logic at lower layers is validated first.

Cons: Higher-level interactions are tested later, potentially delaying the discovery of communication issues.
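The bottom-up counterpart, sketched with hypothetical names: a low-level parser and an in-memory store are integrated first, and a small driver plays the role of the higher-level import job that has not been built yet.

```python
from dataclasses import dataclass


@dataclass
class Item:
    sku: str
    qty: int


def parse_csv_line(line: str) -> Item:
    """Hypothetical low-level parser for 'SKU,QTY' lines."""
    sku, qty = line.strip().split(",")
    return Item(sku=sku.strip(), qty=int(qty))


class InventoryStore:
    """Hypothetical low-level store that accumulates quantities per SKU."""

    def __init__(self) -> None:
        self._stock: dict[str, int] = {}

    def add(self, item: Item) -> None:
        self._stock[item.sku] = self._stock.get(item.sku, 0) + item.qty

    def qty(self, sku: str) -> int:
        return self._stock.get(sku, 0)


def import_driver(lines: list[str], store: InventoryStore) -> int:
    """Driver: simulates the future higher-level job that will call parser + store.
    Returns the number of lines processed."""
    count = 0
    for line in lines:
        store.add(parse_csv_line(line))
        count += 1
    return count


def test_parser_and_store_integrate() -> None:
    store = InventoryStore()
    assert import_driver(["A1, 3\n", "B2,5", "A1,2"], store) == 3
    assert store.qty("A1") == 5  # quantities from two lines were combined
    assert store.qty("B2") == 5
```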

Sandwich (Hybrid) Testing

This strategy applies the top‑down and bottom‑up approaches simultaneously, converging on the middle layer, which is exercised from both directions.

Pros: Balanced coverage across layers, parallel testing by separate teams.

Cons: Higher setup complexity; requires close coordination between teams.

Incremental Testing

Modules are integrated one by one or in small groups, with tests executed after each integration step.

Pros: Easier defect isolation, continuous feedback, reduced debugging scope.

Cons: Extra management overhead for incremental setups and test ordering.
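A sketch of the incremental loop, assuming hypothetical modules `module_a`, `module_b`, and `module_c`: each step adds one integration and runs its check immediately, so the first failure points at the step that introduced the defect.

```python
from typing import Callable, Optional

# Each step pairs a label for what was just integrated with a check that must pass.
Step = tuple[str, Callable[[], bool]]


def run_incremental(steps: list[Step]) -> Optional[str]:
    """Run steps in order and return the label of the first failing step, or None."""
    for label, check in steps:
        try:
            passed = check()
        except Exception:
            passed = False  # an exception also counts as a failed integration step
        if not passed:
            return label  # the defect was introduced at this step
    return None


# Hypothetical modules, integrated one at a time.
def module_a(x: int) -> int:
    return x + 1


def module_b(x: int) -> str:
    return f"value={x}"


def module_c(s: str) -> dict[str, int]:
    key, val = s.split("=")
    return {key: int(val)}


STEPS: list[Step] = [
    ("A", lambda: module_a(1) == 2),
    ("A+B", lambda: module_b(module_a(1)) == "value=2"),
    ("A+B+C", lambda: module_c(module_b(module_a(1))) == {"value": 2}),
]
```

`run_incremental(STEPS)` returns `None` here; if the B-to-C handoff broke, it would return `"A+B+C"`, pointing straight at the newest integration.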

Big‑Bang Testing

All modules are integrated at once and tested as a complete system.

Pros: Simple to execute in small or tightly scoped projects.

Cons: Poor traceability of errors, high debugging complexity, risk of masking defects.

Integration Testing Approaches: Use vs. Avoid

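| Criteria | Top-Down | Bottom-Up | Hybrid (Sandwich) | Incremental | Big-Bang |
|---|---|---|---|---|---|
| Use when | You want early validation of top-level flows | You want stable, well-tested foundations first | Separate teams can test layers in parallel | You want fast CI feedback and clean defect isolation | The scope is tiny |
| Avoid when | Stubs would be complex to maintain or lower layers carry critical logic | Communication issues in higher layers must surface early | Setup complexity and cross-team coordination are hard to afford | The overhead of managing setups and test order isn't justified | The system is large, so failures would be hard to trace |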

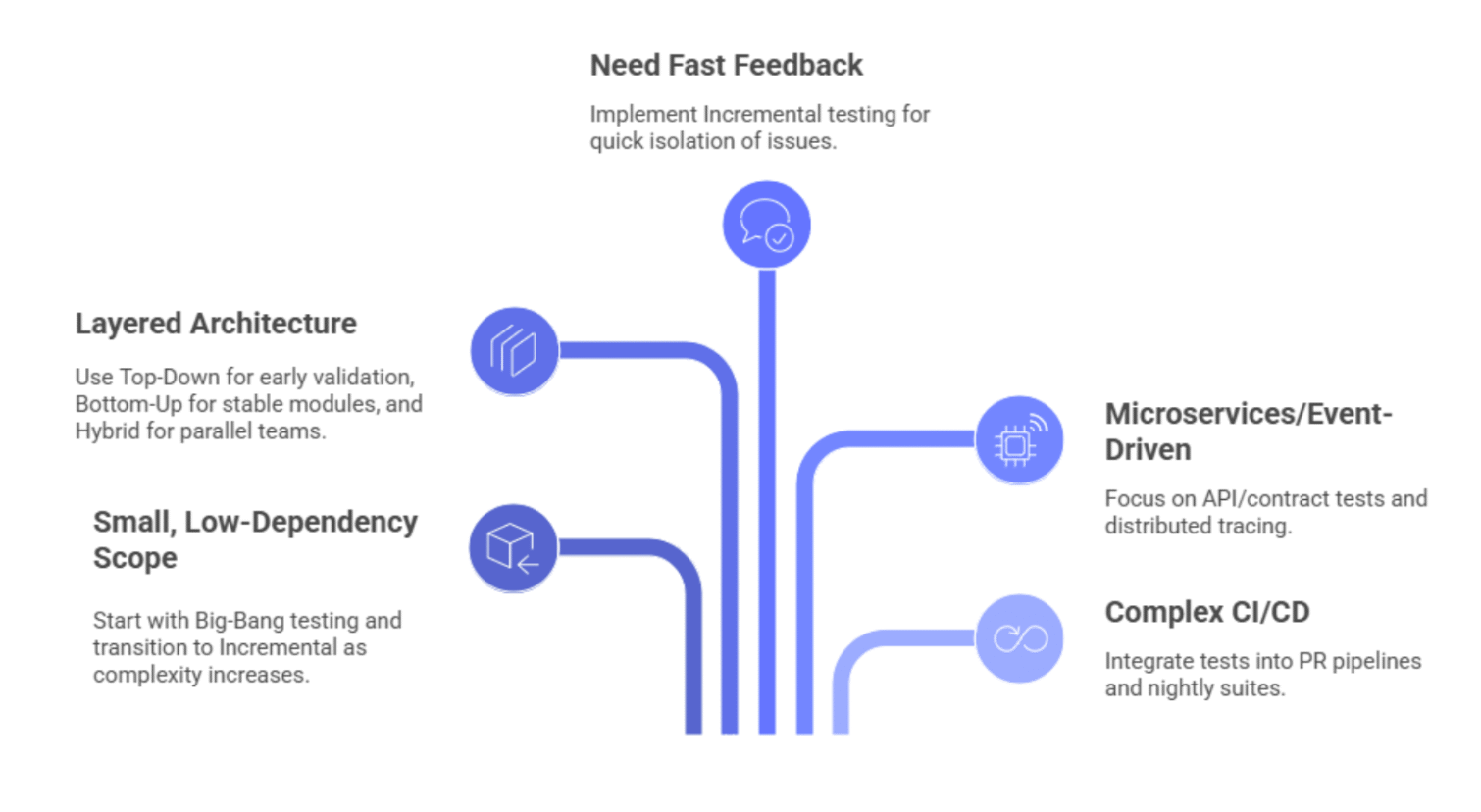

How to read: Top-Down for early flow validation, Bottom-Up for stable foundations, Hybrid for parallel teams, Incremental for fast CI and clean isolation, Big-Bang only for tiny scopes.

Implementation Best Practices

While knowing the methodologies is essential, effective implementation is what determines success. Here are key best practices to follow:

Use Clean, Isolated Test Environments

- Employ setup and teardown routines, such as database snapshots or rollbacks, to reset state before each test and avoid cross-test contamination.

- For microservices, use isolated sandbox environments or local deployments built on containerized stacks (e.g., Docker Compose, Kubernetes namespaces).
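One way to implement the rollback routine, sketched with Python's built-in `sqlite3` as a stand-in for your real database. Seed data is committed once; each test then runs inside a transaction that is always rolled back, so no test sees another test's writes. The `users` table and the `isolated` helper are illustrative, not part of any framework.

```python
import sqlite3
from contextlib import contextmanager
from typing import Iterator

# isolation_level=None puts sqlite3 in autocommit mode, so BEGIN/ROLLBACK are explicit.
conn = sqlite3.connect(":memory:", isolation_level=None)
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, email TEXT UNIQUE)")
conn.execute("INSERT INTO users (email) VALUES ('seed@example.com')")  # committed baseline


@contextmanager
def isolated(db: sqlite3.Connection) -> Iterator[sqlite3.Connection]:
    """Setup: open a transaction. Teardown: roll it back, even if the test failed."""
    db.execute("BEGIN")
    try:
        yield db
    finally:
        db.execute("ROLLBACK")


def count_users(db: sqlite3.Connection) -> int:
    return db.execute("SELECT COUNT(*) FROM users").fetchone()[0]


def test_signup_adds_user() -> None:
    with isolated(conn) as db:
        db.execute("INSERT INTO users (email) VALUES ('a@example.com')")
        assert count_users(db) == 2


def test_signup_same_email_again() -> None:
    # Would hit the UNIQUE constraint if the previous test's row had leaked.
    with isolated(conn) as db:
        db.execute("INSERT INTO users (email) VALUES ('a@example.com')")
        assert count_users(db) == 2
```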

Incorporate API Contract Testing

API testing plays a critical role in integration testing, especially in microservices or service-oriented architectures. Validate that services adhere to agreed-upon contracts, expected data formats, and error-handling behavior.
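A minimal consumer-side contract check, assuming a hypothetical user-lookup response shape agreed between two teams. Dedicated tooling (for example Pact or a JSON Schema validator) does this far more thoroughly; this sketch only shows the idea: the contract is data, and both the success payload and the error payload are checked against it.

```python
from typing import Any

# Hypothetical contracts: field name -> required Python type.
USER_CONTRACT: dict[str, type] = {"id": int, "email": str, "active": bool}
ERROR_CONTRACT: dict[str, type] = {"error": str, "code": int}


def contract_violations(payload: dict[str, Any], contract: dict[str, type]) -> list[str]:
    """Return human-readable violations; an empty list means the payload conforms."""
    problems = []
    for field, expected in contract.items():
        if field not in payload:
            problems.append(f"missing field: {field}")
        elif type(payload[field]) is not expected:
            # Exact type match, so a bool is not accepted where an int is expected.
            actual = type(payload[field]).__name__
            problems.append(f"{field}: expected {expected.__name__}, got {actual}")
    return problems


def test_provider_response_matches_contract() -> None:
    # In a real suite this payload would come from the running provider service.
    ok = {"id": 7, "email": "a@example.com", "active": True}
    assert contract_violations(ok, USER_CONTRACT) == []

    # Serialization drift (id sent as a string) is reported as a contract break.
    drifted = {"id": "7", "email": "a@example.com", "active": True}
    assert contract_violations(drifted, USER_CONTRACT) == ["id: expected int, got str"]


def test_error_payload_matches_contract() -> None:
    not_found = {"error": "user not found", "code": 404}
    assert contract_violations(not_found, ERROR_CONTRACT) == []
```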

Leverage Automation & CI/CD Integration

Automate integration tests and incorporate them into your continuous integration pipeline so they run after unit tests but before releases or staging pushes. Automation ensures consistency and reduces manual overhead.

Parallelize Testing While Managing Dependencies

Design your test suite to run independent integration tests in parallel for speed. Versioned, controlled test data and per-test isolation help manage dependencies between tests.
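A sketch of parallel execution with isolation, assuming each test can own a private copy of its data. Each hypothetical test below gets its own in-memory SQLite database seeded from one controlled fixture, so two tests can modify the same row concurrently without interfering. In a real setup the equivalent might be one schema, namespace, or container per worker.

```python
import sqlite3
from concurrent.futures import ThreadPoolExecutor
from typing import Callable

# Hypothetical controlled fixture data; in practice versioned alongside the tests.
SEED_ROWS = [("widget", 10), ("gadget", 0)]


def fresh_db() -> sqlite3.Connection:
    """Give each test its own private, identically seeded database."""
    conn = sqlite3.connect(":memory:")  # created and used inside the same worker thread
    conn.execute("CREATE TABLE stock (sku TEXT PRIMARY KEY, qty INTEGER)")
    conn.executemany("INSERT INTO stock VALUES (?, ?)", SEED_ROWS)
    conn.commit()
    return conn


def widget_qty(conn: sqlite3.Connection) -> int:
    return conn.execute("SELECT qty FROM stock WHERE sku = 'widget'").fetchone()[0]


def test_sell_three_widgets() -> None:
    conn = fresh_db()
    try:
        conn.execute("UPDATE stock SET qty = qty - 3 WHERE sku = 'widget'")
        assert widget_qty(conn) == 7
    finally:
        conn.close()


def test_sell_all_widgets() -> None:
    # Touches the same row as the test above; safe only because data is not shared.
    conn = fresh_db()
    try:
        conn.execute("UPDATE stock SET qty = qty - 10 WHERE sku = 'widget'")
        assert widget_qty(conn) == 0
    finally:
        conn.close()


def run_parallel(tests: list[Callable[[], None]], workers: int = 4) -> int:
    """Run independent tests concurrently; result() re-raises any test failure."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(t) for t in tests]
        for future in futures:
            future.result()
    return len(futures)
```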

Use Monitoring and Observability Tools

Apply logging, tracing (e.g., OpenTelemetry), and monitoring during test runs. The resulting visibility aids debugging by tracing request flows across services and pinpointing where failures originate.
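OpenTelemetry handles context propagation for you (by default through the W3C `traceparent` header); the standard-library sketch below only illustrates the underlying principle. Two hypothetical in-process "services" pass a request ID in a header-like dict, both log it, and the test uses that shared ID to reconstruct the request's path. The `x-request-id` header name is an assumption.

```python
import logging
import uuid


class ListHandler(logging.Handler):
    """Collects log records in memory so a test can inspect them."""

    def __init__(self) -> None:
        super().__init__()
        self.records: list[logging.LogRecord] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record)


log = logging.getLogger("itest")
log.setLevel(logging.INFO)


def inventory_service(headers: dict[str, str], sku: str) -> bool:
    """Hypothetical downstream service: logs with the ID it received."""
    log.info("inventory check sku=%s", sku, extra={"request_id": headers["x-request-id"]})
    return sku != "out-of-stock"


def order_service(sku: str) -> bool:
    """Hypothetical entry service: starts the trace and propagates the ID downstream."""
    headers = {"x-request-id": str(uuid.uuid4())}
    log.info("order received sku=%s", sku, extra={"request_id": headers["x-request-id"]})
    return inventory_service(headers, sku)


def test_request_id_flows_across_services() -> None:
    handler = ListHandler()
    log.addHandler(handler)
    try:
        assert order_service("widget") is True
        # Both services logged, and both logged the same request ID.
        assert len(handler.records) == 2
        assert len({r.request_id for r in handler.records}) == 1
    finally:
        log.removeHandler(handler)
```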

Emerging Trends in Integration Testing

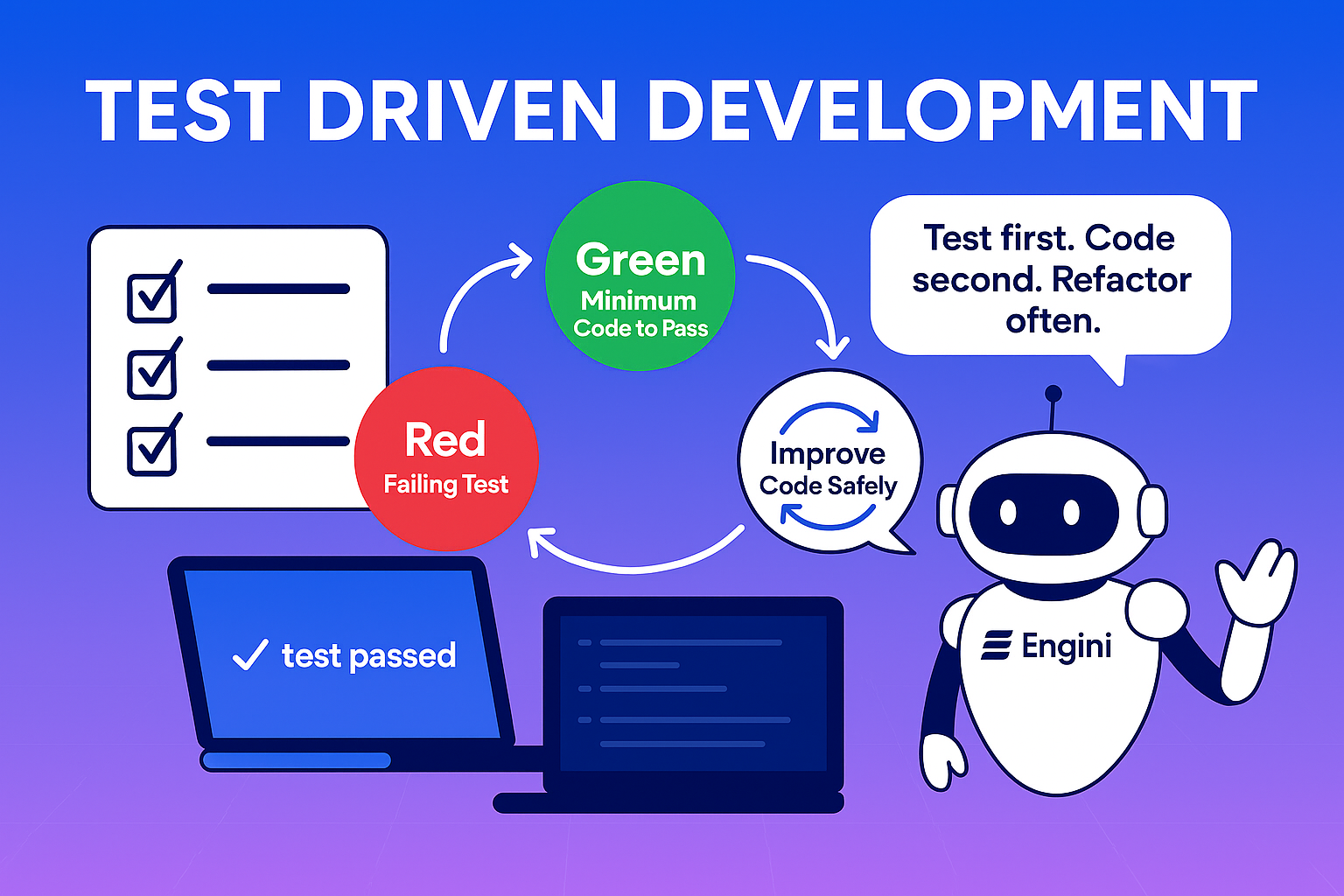

Shift‑Left Testing

Integration testing is moving earlier in the development lifecycle to catch inter-module issues sooner. This shift-left approach shortens feedback loops and aligns with agile and DevOps practices.

AI-Enhanced Testing

Artificial intelligence and machine learning are being incorporated into integration testing: tools can now auto-generate test cases, predict flaky integration paths, and even repair broken scripts in real time.

Autonomous Testing Agents

Emerging agentic AI systems can manage test execution and scheduling, prioritize tests based on risk, and even remediate minor failures without human intervention, moving toward a more self-sustaining test ecosystem.

Cloud-native & CI/CD Orchestration

Distributed test environments in the cloud (e.g., ephemeral infrastructure spun up per pipeline run) support scalable, parallel integration testing. This ties directly into modern CI/CD pipelines, which emphasize comprehensive testing before release.

Choosing the Right Approach for Your Project

Use Top-Down for layered UX flows, Bottom-Up for stable modules, Hybrid for parallel teams, Incremental for fast CI feedback, and Big-Bang only for very small, loosely coupled scopes.

Sample Integration Testing Workflow

- Start from green unit tests.

- Spin up real dependencies (database, queue, third-party sandboxes) in a clean, ephemeral environment.

- Integrate incrementally: add A⇄B and test, then add C and test end-to-end.

- Assert contracts and side effects: payload schema, auth, and error paths; check database rows and emitted messages.

- Capture telemetry: logs, traces, and metrics for each run.

- Tear down and reset state.

- Automate in CI: run on each merge, publish reports, and block releases on failures.
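A condensed sketch of the assertion and teardown steps of this workflow, assuming a hypothetical `PaymentsApi` whose dependencies are in-process stand-ins: a set of valid tokens plays the auth service, and Python's `queue.Queue` plays the message broker. It checks the auth and validation error paths, the response contract, and the emitted message, then resets state.

```python
import queue
from dataclasses import dataclass


@dataclass
class Response:
    status: int
    body: dict


class PaymentsApi:
    """Hypothetical service wired to stand-in dependencies (token store, outbox queue)."""

    def __init__(self, valid_tokens: set[str], outbox: "queue.Queue[dict]") -> None:
        self.valid_tokens = valid_tokens
        self.outbox = outbox

    def charge(self, token: str, amount_cents: int) -> Response:
        if token not in self.valid_tokens:
            return Response(401, {"error": "unauthorized"})
        if amount_cents <= 0:
            return Response(422, {"error": "amount must be positive"})
        self.outbox.put({"event": "payment.charged", "amount_cents": amount_cents})
        return Response(200, {"charged": amount_cents})


def test_charge_flow() -> None:
    outbox: "queue.Queue[dict]" = queue.Queue()  # fresh, ephemeral dependency per test
    api = PaymentsApi({"t-123"}, outbox)
    try:
        # Error paths: rejected requests must not emit any messages.
        assert api.charge("bad-token", 500).status == 401
        assert api.charge("t-123", 0).status == 422
        assert outbox.empty()

        # Happy path: response contract plus the message side effect.
        assert api.charge("t-123", 500) == Response(200, {"charged": 500})
        assert outbox.get_nowait() == {"event": "payment.charged", "amount_cents": 500}
    finally:
        # Teardown: drain leftovers so no state leaks into the next test.
        while not outbox.empty():
            outbox.get_nowait()
```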

Conclusion

Integration testing is the essential bridge between separate unit tests and real-world system success. By validating how modules, services, and APIs interact, you reduce the risk of faults in production and elevate the reliability of your software.

Best practices such as clean environments, test automation, observability, and smart sequencing are vital. Incremental or hybrid testing approaches often provide the strongest balance of diagnostic clarity and efficiency. The field is evolving fast: expect AI-powered test generation, autonomous agents, and cloud-native orchestration to increasingly influence how teams implement integration testing.

Commit to a thoughtful, scalable integration strategy: start small, automate deeply, and build confidence in every release.

FAQ

Q1: When is incremental testing better than big‑bang?

Incremental testing is preferable when you want clearer visibility into failures, manageable integration steps, and faster feedback. Big‑Bang can work for very small scopes, but it makes fault isolation much harder.

Q2: Should I always simulate missing modules with stubs/drivers?

Only when the real modules are unavailable or costly to set up. Simulations are helpful during early development but should be gradually replaced with actual components for real behavior validation.

Q3: How can I reset test states effectively?

Use database snapshots, rollback mechanisms, setup/teardown routines, container restarts, or fresh sandbox deployments to ensure each test runs in a clean environment.

Q4: Is API testing part of integration testing or separate?

API testing is a subset of integration testing and is critical for validating inter-service communication. Build it into your overall strategy to catch interface-related issues.

Q5: How are AI tools impacting integration testing?

AI tools are now auto-generating test cases, predicting integration paths likely to fail, enabling self-healing test suites, and optimizing test selection and prioritization, which reduces manual effort and increases resilience.


Itay Guttman

Co-founder & CEO at Engini.io

With 11 years in SaaS, I've built MillionVerifier and SAAS First. Passionate about SaaS, data, and AI. Let's connect if you share the same drive for success!
